Never Miss an Update!

I kinda wandered into data engineering on accident. When I was a good little data analyst, I would spend some of my time optimizing queries and building automating reporting process. I learned more about how SQL databases work: that having a ton of joins slows queries down, why normalization exists for transaction databases, the benefits of partitioning. Along the way, I also discovered the glory of the cloud and how to take advantage of server-less architectures to minimize costs to complete analytical tasks. In this post I am going to discuss a analysis project I have been working on. More specifically how to utilize the AWS services S3, Lambda, and SQS to build a server-less data pipeline.

The so called “Modern Data Stack”

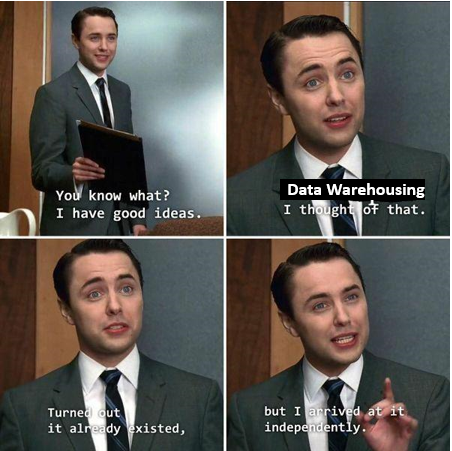

The thing about the analytics space is its tough to stay up to date on the various VC buzzwords for things that are 20 years old. The Modern Data Stack has been big on data twitter and amongst data bloggers. To me it means simplifying your stack and tooling to use a shared syntax while minimizing costs. Tools like duckdb and dbt are good examples of this (highly endorse). I imagine I’m not the only one who is much faster at doing data transformations in SQL vs pandas, dplyer, spark. Tools that support SQL syntax speeds up a lot of tedious mechanical work and leaves more time for higher level analysis.

SQL is Lindy

Cost minimization is so important for data teams because it is difficult to directly tie their outputs to revenue/profits. Of course our work is import and the business collapse without our novel and timely insights. But it is easy to link product and sales roles to money coming in. Frugal data engineering is likely going to be how its gonna be going forward, unless the 0 interest rate era returns.

Why S3 and AWS?

Cloud storage is pretty cheap these days. They get you is processing all your scanning data which at scale can be quite significant. S3 is great because of its high performance, scalability, and durability. Lets say you are a start-up, small business, or a hustler and can’t afford to build out and maintain an enterprise level data warehouse (Snowflake, Redshift, Databricks). An S3 data lake is a perfect solution to use if you’re just building out reporting infrastructure without breaking the bank. It will also serve as a excellent foundation in a more complex reporting ecosystem that you can grow into later.

Out of the big three cloud platforms (AWS, Azure, GCP), I find AWS to be the most intuitive and best performing. Google’s Bigquery is pretty awesome, but I have found the AWS server-less services to be the easiest way to get something working.

Architecture

I posted a PowerBI dashboard I made of the US Real Estate market. I initially built the dashboard using local csv files. This made refreshing the dashboard a nightmare and thus unlikely to happen. So I built out an fully automated server less data pipeline that loads into a Data Lake that PowerBI then reads from. Several of my local web scraping scripts also load into this Bucket.

Services Used

- EventBridge – Trigger that sends a message to lambda 1 to start the process. Ex. Cron Job (30 9 ? * Mon *) = Every Monday morning at 9 30.

- Lambda 1 – Function that iterates through all data series and sends a message to the queue for processing.

- Parameter Store – Securley stores the FRED API key so the lambda function can pull it in.

- SQS – Queue that sends and receives messages between Lambdas.

- Lambda 2 – Refreshes data from FRED API and updates S3.

- S3 – Stores all data in parquet format (cost efficiency) using Prefixes for organization. Ex. s3://econ-datalake/FRED/NFCI.parquet

- Users – I created two IAM users that can write and read files for this particular S3 bucket.

- Write User – To be used by Webscraping scripts to update S3 Data

- Read User – To be used in PowerBI dashboard.

If you want the code to generate this architecture please reach out!

AWS Quirks

SQS – The SQS Fanout is a pretty popular architecture design, I didn’t use it here but its important to understand and a common use case. Essentially you can take a message from SNS and use that one message to have multiple queues to receive messages to initiate other processes. Documentation

When using SQS with Lambda, you are going to want to configure a deadletter queue that receives unprocessed events otherwise they will get lost. This will make your architecture more resilient since those events can now be reprocessed.

Basic AWS Security Stuff

Its best to go with to the source documentation. Amazon writes some good documentation. Hackers like to get into AWS environment, spin up a bunch of expensive EC2 instances, mine crypto, and leave you holding the bag. Here are some tactical things that will cover your bases.

MFA – Always set up MFA for your root account and any user that logs in to the console. Adding this security layer will make you a harder target. Documentation

Root account – Second thing you should do when you create an AWS account is create an IAM Admin account that you use for interacting with services in the console/API. This user should also have MFA set up. Documentation

VPCs – A Virtual Private Network wasn’t necessary in this use case due to the services involved. But If I had a SQL server involved in the architecture then a VPC would be required and access would need to be configured using security groups and service endpoints. Documentation

IAM – Only apply the permissions needed to accomplish a task. It can be tedious to decide what those are during the development process. One approach I have used is to give yourself full admin permissions during development then once everything works, restrict your resources to use only what they need. Documentation. Example IAM Policies can be found at the end of the post.

Reading Data off S3 Into PowerBI

You can use the following code to read parquet files off a S3 bucket into PowerBI. In the json config file you will need to store the access and secret key associated with the read_s3 user that I created.

import json

import awswrangler as wr

import boto3

with open('C://Users//Project//config.json', 'r') as f:

config = json.load(f)

# authenticate with AWS data wrangler

aws_key = config['AWS_key_s3_read']

aws_secret = config['AWS_secret_key_s3_read']

session = boto3.session.Session(aws_access_key_id=aws_key,

aws_secret_access_key=aws_secret)

def read_s3(series, session):

bucket = 's3-bucket-name'

key = f'{series}.parquet'

# Read the parquet file into a dataframe

if wr.s3.does_object_exist(f's3://{bucket}{key}', boto3_session=session):

df = wr.s3.read_parquet(path=f's3://{bucket}{key}', boto3_session=session)

return df

else:

return None

df= read_s3('/Prefix/Series', session)Did you like this post, would you like to code to build it? Or do you have another business intelligence project you need help on? Hit me up!

Sample IAM Policies

Lambda 2 Policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"sqs:DeleteMessage",

"s3:PutObject",

"s3:GetObject",

"sqs:GetQueueUrl",

"sqs:ReceiveMessage",

"sqs:GetQueueAttributes",

"s3:ListBucket",

"logs:CreateLogGroup"

],

"Resource": [

"arn:aws:sqs:us-east-1:acct-num:fred-series-queue",

"arn:aws:logs:us-east-1:acct-num:*",

"arn:aws:s3:::bucket/*",

"arn:aws:s3:::bucket"

]

},

{

"Sid": "VisualEditor1",

"Effect": "Allow",

"Action": [

"logs:CreateLogStream",

"logs:PutLogEvents",

"ssm:GetParameter"

],

"Resource": [

"arn:aws:logs:us-east-1:acct-num:log-group:/aws/lambda/get_fred_data_series:*",

"arn:aws:ssm:us-east-1:acct-num:parameter/fredApiKey"

]

}

]

}S3 Read User Policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:GetObject",

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::Bucket/*",

"arn:aws:s3:::Bucket"

]

}

]

}S3 Write User Policy

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "VisualEditor0",

"Effect": "Allow",

"Action": [

"s3:PutObject",

"s3:GetObject",

"s3:ListBucket"

],

"Resource": [

"arn:aws:s3:::Bucket/*",

"arn:aws:s3:::Bucket"

]

}

]

}